Underwater Virtual Reality Pioneer Casey Sapp

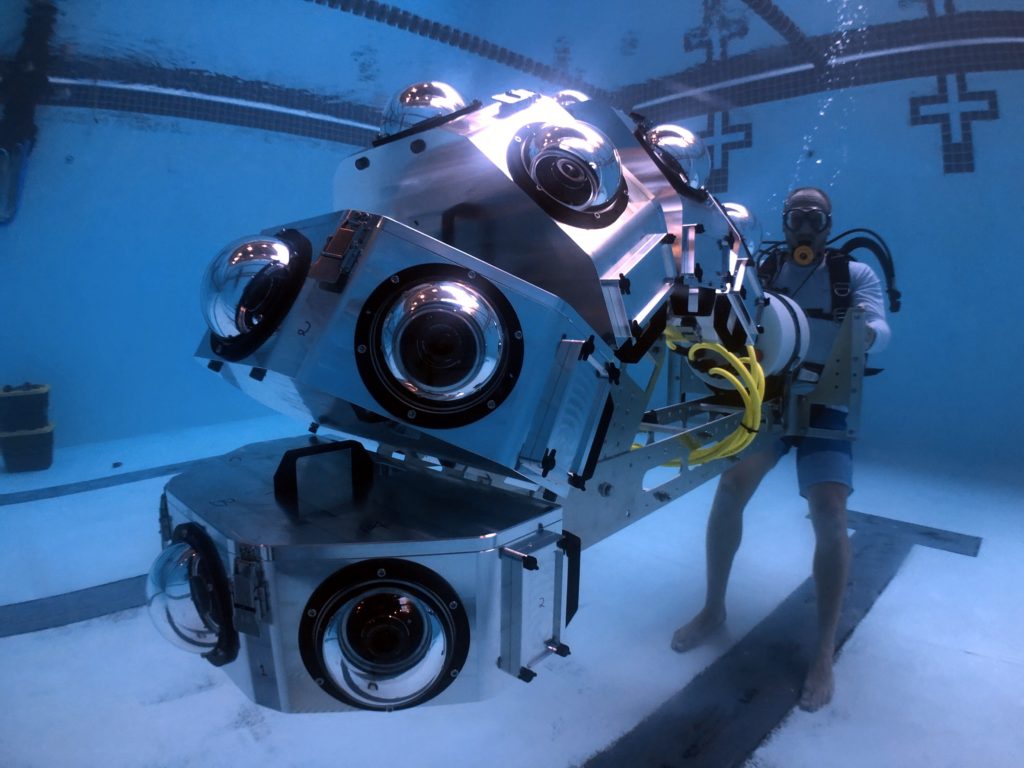

In episode twenty-two, host Brett Stanley chats with Casey Sapp – a pioneer in underwater Virtual Reality. Casey’s company VRTUL designs and builds some of the most amazing camera arrays for capturing 180 and 360 degree underwater experiences.

We chat about how he got started in this industry, and some of the very cool clients he’s had. There is a bit of technical jargon in this episode, but you should be able to follow along fine without knowing all the terms.

Follow Casey/VRTUL: Website, Facebook, LinkedIn

Discuss the episode in our Facebook group.

Visit our YouTube for livestreams

About Casey Sapp – Underwater Virtual Reality Pioneer

Casey Sapp is CEO of both VRTUL (Virtual Reality Technology Underwater Limited), which focuses on technology development and production services for underwater cinema, and Blue Ring Imaging, which designs and manufactures underwater camera products. VRTUL’s clients include the BBC, Discovery, National Geographic, Good Morning America, Monterey Bay Aquarium Research Institute, and many others at the intersection of cinema and science.

Some of Casey’s inventions include the first 360 3D underwater camera system, the first underwater 360 live broadcast (GMA), the first ROV VR piloting system (MBARI), the first 360 cinematic camera on a submarine, and the highest resolution underwater cinematic camera system in the world (MSG Spheres).

In 360/VR storytelling, Casey has played many roles, including Producer, DP, Camera Tech, and Post-Supervisor. His most notable productions include Into the Now (2019), Our Blue Planet VR (2019), Into Water (2019), Immotion Group Blue Ocean Series (2018), Hydrous Immerse (2018), Sharkwater VR (2018), Mako Madness (2017), and Orca 360 (2016).

Podcast Transcript

Ep 22 – Casey Sapp

Brett Stanley: [00:00:00] Welcome back to the Underwater Podcast. This week we’re putting on our virtual reality headsets and chatting with Casey Sapp, a pioneer in underwater VR. Casey’s company VRTUL designs and builds some of the most amazing camera arrays for capturing 180- and 360-degree underwater experiences.

We chat about how he got started in the industry and some of the very cool clients he’s had. There is a bit of technical jargon in this episode, but you should be fine to follow along without knowing all the terms. All right. Let’s dive in, virtually.

Casey, welcome to the Underwater Podcast.

Casey Sapp: [00:00:33] It’s good to be here, Brett. Good to meet you virtually.

Brett Stanley: [00:00:37] Yeah. I mean, everything’s virtual these days, which is a really apt way of introducing you, because you are the CEO of VRTUL Incorporated.

Casey Sapp: [00:00:46] It was a cool name five years ago. And I am the CEO of VRTUL, that’s right. So virtual is my strength, and I’m glad to be here.

Brett Stanley: [00:00:56] So what is underwater virtual reality? I mean, that’s kind of what your life is right now, I guess. For the last few years that I’ve known of you, it’s been building these underwater virtual reality cameras and everything that goes along with that. Do you want to talk us through what that actually means for your day-to-day?

Casey Sapp: [00:01:14] Yeah. So I live in Encinitas, San Diego, and I’ve been here for about seven years. The quick pitch is that VRTUL stands for Virtual Reality Technology Underwater Limited. We focus on multi-camera prototype housing designs, typically one of a kind.

Our focus over the last couple of years has been very, very high-end, high-resolution, tons of cameras, very, very heavy, large camera technology. This has been used in theme parks, dome projections, virtual reality experiences, and what’s considered mixed reality all over the world. So our clients over the last 10 years have been Google, Facebook, Oculus, SeaWorld, even space companies, because if you can do it underwater, you can do it in space.

Brett Stanley: [00:02:19] Yeah. So for me, growing up, virtual reality kind of meant terrible graphics and sort of a headset where you’d be in a virtual world. But for you guys, and maybe this is how the term is used these days, it’s more like 360-degree cameras and stuff, right?

You’re not generating fake worlds. You’re actually bringing the real world to people via headsets. Is that right?

Casey Sapp: [00:02:45] It’s a dream, and the dream is the holodeck. We do want photoreal, multisensory experiences, but there are a lot of hardware and software limitations to doing that.

Virtual reality has come and gone over the decades, even in the fifties and sixties. Then there was an excitement in the nineties with The Lawnmower Man. And then, about six years ago, in 2015, it came back with Chris Milk and his company Vrse, which I riffed off of with my company.

His company was Vrse and mine is V-R-T-U-L. He has some really interesting TED Talks, and he really inspired a new generation of immersive filmmakers. VR years in this industry are a little bit like dog years. A number of companies have come and gone over the last five to six years, but a few have remained, and a few have really found their niche and professionalized.

They’re doing training, they’re doing camera engineering, volumetric. There are a lot of definitions and a lot of terminology that fall into the immersive space. The way I like to explain it is that in English there’s one word for snow, but the Eskimos have a hundred: there’s wet snow, there’s yellow snow, there’s dark snow, there’s drinkable snow. And so VR is an all-encompassing term for what is immersive, and people use mixed reality, XR, AR. But the focus of our conversation today is really 180 and 360, both monoscopic and stereoscopic experiences, which can feel photoreal.

Brett Stanley: [00:04:51] Yeah.

So you guys are not using any kind of computer-generated graphics. You’re not creating any worlds. You’re just taking what you capture in the real world with your cameras and then stitching it all together for the viewers. You’re not really creating anything that’s not already there.

Casey Sapp: [00:05:08] That’s right. My company’s focus isn’t heavy CG experiences; there are a lot of really great VFX studios out there. We’ve been focused on live action and really capturing what is real. But there are CG elements. Ironically, these shoots are very, very VFX-heavy. You have to go into these productions with the mindset of what is going to need to be rotoed out, what’s going to need to be painted, and what’s going to be composited at the different depths. You’re looking at these productions in terms of frames. So, ironically, while it is live action, the best techs and camera ops have a visual effects understanding too, because if something gets too close to the cameras, you have to recreate it.

And then you’re back to CG again.

Brett Stanley: [00:05:58] And so that’s what you’re doing. It’s the post-production, making it work with what you’ve recorded, as opposed to adding something that wasn’t already there.

Casey Sapp: [00:06:08] Right. Yeah. So the, the multi-camera world is it’s to me, I think it’s a different world entirely than traditional video or photo it’s. It’s kind of a third, medium. There are some major differences with multi-camera productions than normal, uh, productions, and there’s some benefits to, um, I think I’ll just leave.

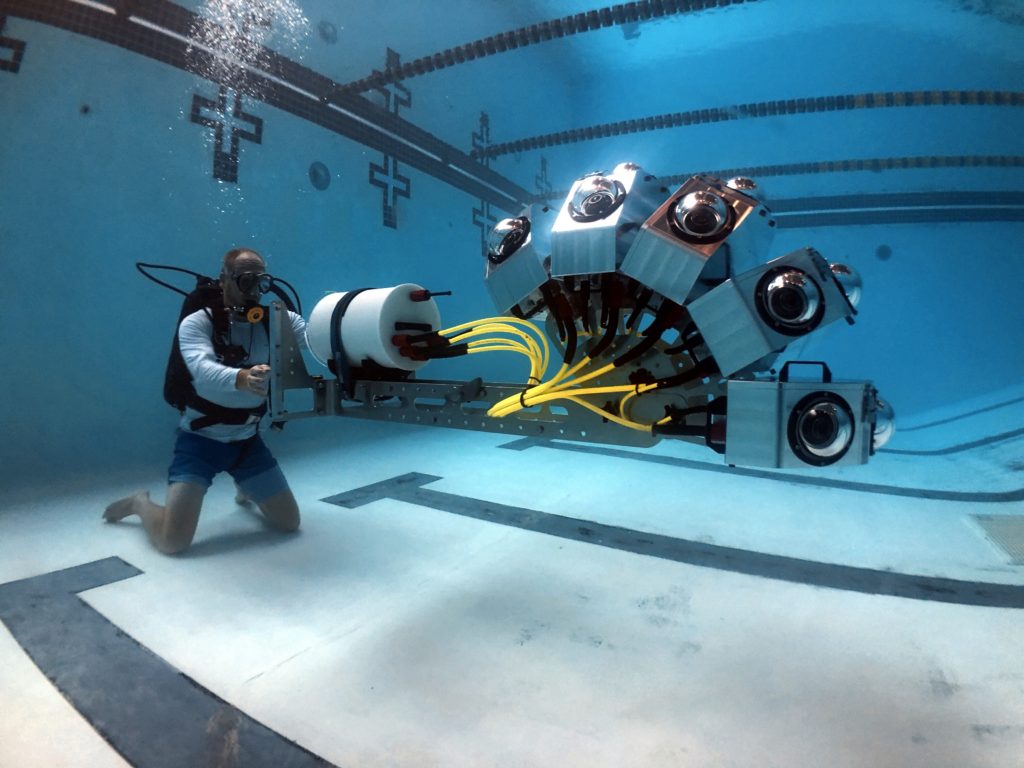

That’s some of the differences and, um, and we can go from there, but for a while, there’s, there’s tons of data. So when you’re doing these high end professional productions, the cameras are shooting a hundred gigabytes, a minute, 200 gigabytes a minute. And you, you have to go in with the mindset that post-production is really the most expensive part of this whole process.

The second is that if one camera goes out, if one camera fails, the shot fails. So everything needs to be bulletproof from end to end. You can’t recreate a camera; if it’s gone, you need to go back to the drawing board. Another thing I’m finding, compared to a traditional high-end movie shoot with A-list actors, is that in this multi-camera underwater space there are actually some concessions you have to make with top-of-the-line cameras, like an ARRI or even a RED Monstro. And it’s not always for budgetary reasons. It’s the size of the camera: the larger the camera, the harder it is to stitch. So even if I had an infinite amount of money, if the stitching is impossible or there’s too much parallax, then you can’t finish the job.

So there’s this really weird set of trade-offs you have to make, even with a large budget. You need to choose these square, modular cameras, like a Z CAM E2 or a Blackmagic Micro Studio or Micro Cinema, if you’re going to do something that’s high resolution. Even the RED Komodo is, unfortunately, just a little bit too large, and we’ve looked at it extensively.

So it’s really interesting. Another thing is that when you’re shooting, you’re shooting toward the horizon. You’re not looking up, you’re not looking down, you’re not trying to chase something. You’re literally trying to capture ambient scenes, and the framing has to happen either in post or with the talent itself; the camera doesn’t really play a lot into that. And then lighting is a whole different problem, having the lights in frame, because the cameras capture everything, including the lights.

So trying to figure out how to paint those out or roto those is sometimes a concession that people aren’t comfortable making financially. So oftentimes people shoot with little to no light, just ambient. Those are the major differences in the lens through which we see this medium and how we plan productions.
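Two of the differences Casey describes, the sheer volume of data and the fact that one failed camera sinks the whole shot, can be put into rough numbers. The Python sketch below is illustrative only: it assumes the quoted 100 to 200 GB per minute is the aggregate rate for the whole array, that 1 TB means 1,000 GB, and that each camera fails independently with the same probability, which real rigs with shared cables and power will not strictly satisfy. The function names are hypothetical.

```python
def minutes_of_recording(capacity_gb: float, rate_gb_per_min: float) -> float:
    """Minutes of footage that fit in `capacity_gb` at a constant data rate."""
    if rate_gb_per_min <= 0:
        raise ValueError("data rate must be positive")
    return capacity_gb / rate_gb_per_min


def array_success_probability(per_camera_reliability: float, n_cameras: int) -> float:
    """Probability that every camera in the array captures the take.

    Assumes independent, identical cameras: because one failed camera means a
    failed stitched shot, the whole array succeeds with probability p ** n.
    """
    if not 0.0 <= per_camera_reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return per_camera_reliability ** n_cameras


if __name__ == "__main__":
    # Assumption: 1 TB = 1,000 GB and the quoted rates are for the whole array.
    for rate in (100, 200):
        print(f"{rate} GB/min -> {minutes_of_recording(1000, rate):.0f} min per TB")
    # Hypothetical: 30 cameras (like the 30-GoPro rig), each 99% reliable.
    print(f"30 cameras at 99%: {array_success_probability(0.99, 30):.2f}")
```

Under those assumptions a terabyte lasts only 5 to 10 minutes, and even a 99% per-camera hit rate leaves roughly a one-in-four chance of losing a 30-camera take, which is why everything has to be bulletproof from end to end.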

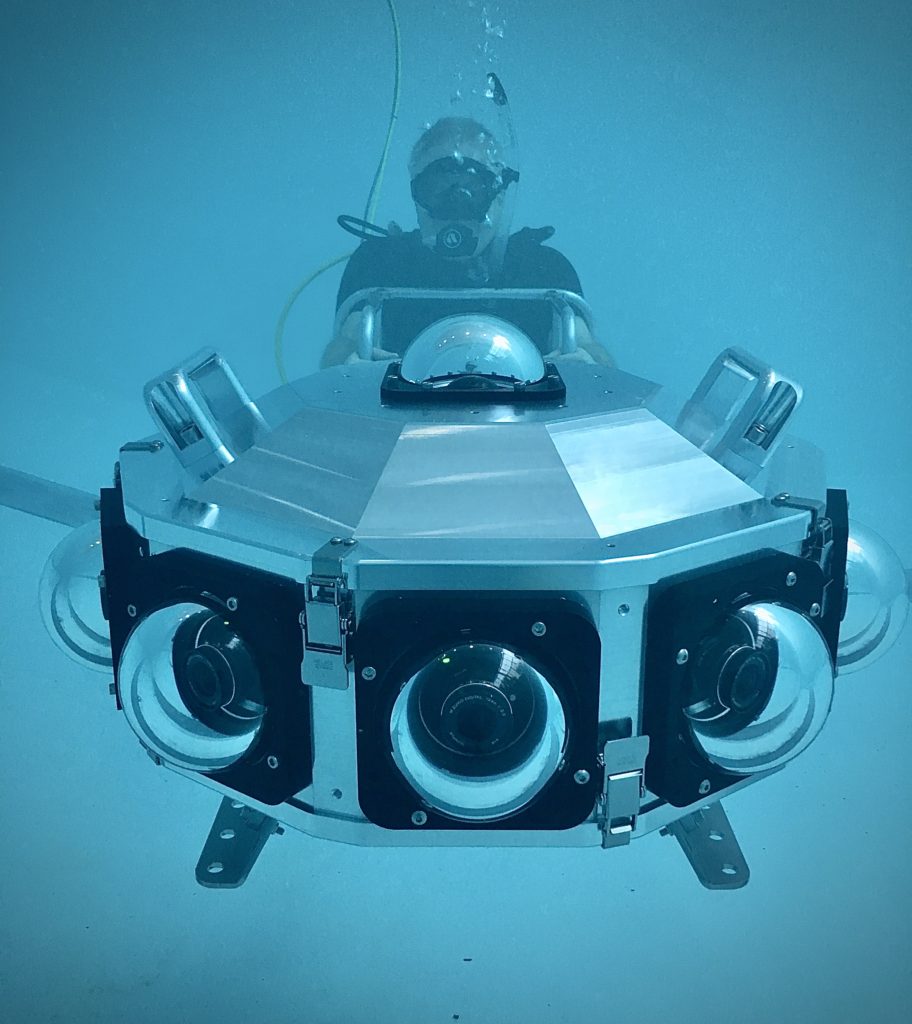

Brett Stanley: [00:09:25] So there’s a lot to unpack in that. For people who don’t really know what VR and 360 look like from a technical point of view, it’s an array of cameras, right? So if you’re doing a 180, you might have two or more cameras set up so that they’re looking in different directions, recording the whole scene in 180 degrees.

And then you can stitch that footage together to make an immersive controllable environment for the viewer. Is that right?

Casey Sapp: [00:09:55] That’s right. So I think it’s important for me to give respect to, and reference, the pioneers of really big, large camera systems like Howard Hall and Bob Cranston. I don’t know if you’re familiar with the history of the IMAX underwater camera.

It was almost a ton. It only shot for three minutes, and these guys had to figure out how to travel with this thing all over the world to capture behaviors and shoot what we know and love in all of the domes and planetariums around the world. Once the technology got up to 8K sensors, the RED Helium and Monstro were used for a lot of these planetarium experiences, and for large format, sometimes arrays with the RED Weapon or some type of 5K or 6K camera.

But now we’re getting into a space where LED technology is outpacing the camera systems. What we’re finding is that you do shoot 180, and there are single-camera systems that can shoot 180, but they can’t shoot 180 at 15K or 20K, or in raw, or at 60 frames a second. And so there are different arrays in different configurations: 360, 180, 200 degrees. Maybe a large vertical resolution and a small horizontal one, or a wide horizontal resolution, maybe 20K or 30K by 3K.

And there are a number of different configurations that we create based on how the display technology works.

Brett Stanley: [00:11:40] Right. So are you basically setting up the camera configurations and everything knowing where it’s going to be presented?

Casey Sapp: [00:11:47] That’s right. We work with the end in mind, and it’s really hard to make a do-it-all camera system for the budgets or for the display, for example. I know we’re getting very technical very fast, but I think it’s important to show how different this medium is.

Brett Stanley: [00:12:10] Yeah, go for it.

Casey Sapp: [00:12:11] For example, I have a project that’s over 20K. It’s actually about 20K by 3K, and I have to shoot with over 60K of unprocessed camera resolution because of the lenses, how much pixel loss happens on the sensor, the convergence between the images, and a number of other things that happen downstream. You’re losing an insane amount of data from start to finish.

So when you’re shooting 60K or 70K in order to get a 20K image, you really have to think about the display and whether it’s going to be worth it, because you don’t want to overdo it if you don’t have to.
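Casey’s figures imply that only roughly 29% to 33% of the captured horizontal resolution survives into the delivered frame (20K out of 60K to 70K). A minimal Python sketch of that pixel budget follows. It assumes a single combined retention fraction can stand in for the lens, sensor, convergence, and downstream losses he lists, and it treats the capture budget as the summed width of identical cameras; real array design also depends on lens field of view, overlap, and layout. The 4,096-pixel camera width and the function names are hypothetical.

```python
import math


def retention_ratio(captured_width_px: float, delivered_width_px: float) -> float:
    """Fraction of captured horizontal pixels that survive into the master."""
    return delivered_width_px / captured_width_px


def cameras_needed(delivered_width_px: float, retention: float, camera_width_px: float) -> int:
    """Smallest number of identical cameras whose summed width meets the
    capture budget implied by `retention`.

    Simplification: real arrays also depend on lens field of view, overlap,
    and layout, not just on total pixel width.
    """
    if not 0.0 < retention <= 1.0:
        raise ValueError("retention must be in (0, 1]")
    capture_budget_px = delivered_width_px / retention
    return math.ceil(capture_budget_px / camera_width_px)


if __name__ == "__main__":
    # Figures from the interview: roughly 60K-70K captured for a ~20K deliverable.
    for captured in (60_000, 70_000):
        print(f"{captured:,} px captured -> {retention_ratio(captured, 20_000):.0%} retained")
    # Hypothetical: 30% retention and 4,096-px-wide cameras.
    print(cameras_needed(20_000, 0.30, 4_096))
```

With those hypothetical numbers the sketch asks for 17 cameras’ worth of horizontal pixels for a 20K deliverable, which shows how quickly an array grows when the display demands it.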

Brett Stanley: [00:12:56] So what sort of projects have you guys been doing? You’re talking about big 20K displays and stuff. What is the range of clients that you have?

Casey Sapp: [00:13:04] We have a couple of buckets, and they range from very fast-turnaround social media 360 experiences to virtual reality headsets. There are video apps in the headset where you can watch these different immersive stories, and there are new live-streaming platforms coming out.

And there’s long-form storytelling. Then we have a dome set of clients who want to do very, very sharp, edge-to-edge 8K experiences.

Brett Stanley: [00:13:40] And when you say dome, you mean like going into a planetarium, sort of?

Casey Sapp: [00:13:44] It could be. Or even a little bit larger, maybe something that is almost spherical in nature, where it’s almost like walking into a soap bubble. So a dome could be something that is spherical in nature. It could be 180 degrees, 250, or 300 degrees. And then you have these large LED displays, which are flat and maybe have different curvatures, but they aren’t meant for a headset. They’re not meant to be immersive. They’re just massive. So you build the camera system and the workflow to those budgets, because each one is very, very different.

Brett Stanley: [00:14:28] Yeah. It must be very interesting to have a client come to you with a project and then work out how to build a camera to fit it, because you do everything bespoke, right? Everything is mostly custom for each client.

Casey Sapp: [00:14:42] That’s right. In 2015 I started this company, and I had this idea to do a mobile app that was like the YouTube for immersive experiences. Very quickly I realized that was a poor idea. And I saw that people were making money doing these immersive productions, and that no one really had any idea how to do it; everyone was just making it up and passing themselves off as the experts.

So I was like, okay, if we’re going to make money, maybe this is the way to go: building camera arrays. And I hired a guy from Legend named Matthew DeJohn, who was an amazing generalist. He could do anything from helping 3D-print the arrays to helping build post-production workflows.

He’s at Blackmagic now. We started to pitch these very, very high-quality 3D 360 experiences. Then we began to concept underwater 3D arrays, and very quickly the opportunities that became available to us were to build these arrays with GoPros and 3D-printed mounts, and just jump in and see what we could capture.

Everyone was trying new things, so we were getting paid to R&D, and being in the water and getting paid to innovate was a dream come true. Then we got to the point where we were asked to do a full 360 3D production in 2016. We had to build arrays with over 30 GoPros, and processing that footage and synchronizing it was such a headache.

We said, if we ever do this again, we are going to find and hire the best camera engineer to help us build something. We very painfully got through it, and it ended up being a successful theme park attraction at SeaWorld San Diego for a few years. And then we have a guy named Jeremy Childress, who to me is one of the best marine engineers in the world.

He’s based in Oregon and runs a company called the Sexton Corporation. Over the last few years, Matt has transitioned out and Jeremy has become my partner. What we do is really blend the creative and the technical to build bespoke systems. Jeremy has the machine shop. He has the marine engineers. He has the assembly. He has the wherewithal for networking and electrical. Through that, we’ve been able to build these systems very rapidly and very successfully for a number of clients.

Brett Stanley: [00:17:46] That’s so cool. So you’re designing these camera arrays, but then also designing the housings to go around them. Does that change your restrictions on what you can do in terms of laying out the array?

Casey Sapp: [00:17:59] It does. I think a lot of people think that what you can do above water, you can do below water. But things become very heavy very quickly. They become hot very quickly. They grow in size. They become hard to travel with. And a certain lens above water, say a fisheye lens that people really like using, doesn’t necessarily work underwater, or you lose too many pixels beyond 180, because a lot of domes don’t go beyond that.

There are a lot of different rules in marine engineering and development that people take for granted. They think you can just put a camera in some type of aluminum box and it’s going to work. And even the optics around fixed-focus lenses: it’s a world where people spend their whole careers specializing.

Brett Stanley: [00:19:01] Yeah, exactly.

Casey Sapp: [00:19:02] So I’m by no means the expert in any of these areas. I’m lucky to hire really amazing and talented guys to develop custom underwater optics and help figure out where the focus ranges are. But it’s a different world from single-cam productions, and definitely from terrestrial productions.

Brett Stanley: [00:19:26] Oh, totally. I guess you’ve got so many different factors. Like you talked about, you’ve got the size of things, you’ve got the weight of things. And it’s not like you can just build a big glass bubble and fill it full of cameras, because then how are you going to move that around, and how are you going to sync it?

So there’s all these sorts of things to keep in mind.

Casey Sapp: [00:19:44] We’ve had a number of people over the years ask us to try to put the Nokia OZO underwater, or the Insta360 camera, which is this spherical ball, an integrated camera with a really nice, tight post-production workflow. But because they have fixed-focus lenses, you would have to build this fishbowl, almost an aquarium, three or four feet across in large custom glass. You’d have to figure out how to travel with that, figure out how to control the camera, keep it buoyant, and keep it from overheating. Not to mention, you can actually see the reflections of these cameras in these bubble housings. It’s a mess.

So the way we think about it is custom camera arrays, where you treat all of the cameras like one camera. The old method of doing underwater 360, and even terrestrial stuff, was that you set all of the cameras to the same settings. There’s no global control system. You press record on all of them, you clap sync, maybe you do some type of audio sync, and then you run them till the cameras die. If we had to do that as a career, I’d rather be doing something different than just pressing record and stop on all these cameras.

So what we try to do is build mini computers, Raspberry Pis, small GPUs, depending on the system, so that we can get a preview that represents all of the cameras. We can see all the cameras running, and when we do an exposure setting or an f-stop change, they all change. And when we’re recording, we’re recording judiciously. We’re not running these 1 TB SSDs or 512 GB CFast cards till the end, because then it would take weeks to review the footage. So we try to limit the record times and speed up the stitch-to-preview turnaround to within a few hours or a day.

That is as close as we can possibly get to the way folks in Hollywood doing A-list productions would get it. Underwater, you can’t really get more than a preview of one camera at a time, not even a stitched preview. It’s different. It’s just different.
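The older approach Casey mentions, starting each camera separately and then clap- or audio-syncing them, usually comes down to finding the time offset that best lines up two audio tracks. A minimal Python sketch of that idea using brute-force cross-correlation follows. It is a generic technique, not VRTUL’s tooling, and the function names and toy signals are hypothetical; production tools typically work on full-rate audio with FFT-based, normalized correlation rather than this simple loop.

```python
def estimate_lag(ref: list[float], other: list[float], max_lag: int) -> int:
    """Return the lag (in samples) that best aligns `other` with `ref`.

    A positive result means a shared sound, such as a clap, occurs `lag`
    samples later in `other` than in `ref`. Brute force: O(len(ref) * max_lag).
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Dot product of `ref` with `other` shifted by `lag`, over the overlap only.
        score = sum(
            x * other[i + lag]
            for i, x in enumerate(ref)
            if 0 <= i + lag < len(other)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def lag_to_frames(lag_samples: int, sample_rate_hz: int, fps: float) -> int:
    """Convert an audio offset in samples to the nearest whole video frame."""
    return round(lag_samples / sample_rate_hz * fps)


if __name__ == "__main__":
    # Toy tracks: a "clap" at sample 10 on camera A and at sample 13 on camera B.
    cam_a = [0.0] * 50
    cam_b = [0.0] * 50
    cam_a[10], cam_a[11] = 1.0, 0.5
    cam_b[13], cam_b[14] = 1.0, 0.5
    print(estimate_lag(cam_a, cam_b, max_lag=5))
```

Once each camera’s offset against a reference camera is known, it can be converted to frames (for example, 1,600 samples at 48 kHz is one frame at 30 fps) and used to trim the clips so they start together. With dozens of cameras, this has to be repeated for every one of them, which hints at why this workflow was such a headache.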

Brett Stanley: [00:22:26] So are you saying that you do get a stitched preview while you’re down there, or you don’t?

Casey Sapp: [00:22:31] No. It takes a very, very heavy GPU process, with something like an NVIDIA 1080 card and what’s considered a VR-ready computer, and putting that in its own enclosure is a huge pain. So if we were to do a live stitched feed to the surface, and there are a lot of projects coming onto the scene to do that, you really want to do all of the heavy GPU processing at the surface.

But if you’re doing something self-contained, blue-chip natural history, run-and-gun, you’re just looking at individual camera feeds, then doing all the processing later and seeing if you got it.

Brett Stanley: [00:23:16] So if you’re operating the camera underwater, before you start recording, do you just cycle through all the cameras and make sure they’re all recording and everything’s good?

Casey Sapp: [00:23:27] Oh man. Every time the camera moves, from the hotel room to the dock, the dock to the boat, the boat to underwater, you’re checking, trying to figure out: is this thing still working? Is everything still plugged in? Because one movement of a cable is the difference between the whole array working or not working.

There is constant review, constant self-checks, maintenance, support. There’s a methodology we’ve refined over the last few years to ensure it’s bulletproof, and we’ve been successful. But I had this project five years ago with Michael Muller.

He’s a well-known photographer in the Hollywood space who does a lot of movie posters and works with celebrities. He’s a great underwater photographer as well, very passionate. He wanted to go on this worldwide tour, hitting 10 or 12 destinations and creating a series called Into the Now. We were a little bit early on in our professional camera-making experience, and we went with the Blackmagic Micro Studio cameras, which had an external recorder. I don’t know if you’re familiar with that camera, but it was a 4K, 30-frames-per-second, ProRes 422 camera, which at that time was the best camera available for these types of rigs, but it only did external recording.

So you had these flimsy little cables carrying the data from the sensor to the recorder. Michael was patient and resilient, and we figured out over time how to handle this, but sure enough, every time the boat hit a wave, one of the cables would come out. So we were doing surgery on these cameras constantly on the boat, in hot, moist air and in the sun.

And it was just so painful.

Brett Stanley: [00:25:41] And so you’ve got to crack open the housing, find the cable that’s come out, and put it back?

Casey Sapp: [00:25:45] Yeah, and you can’t glue it, you can’t solder it. There were so many things we learned through that process, which has informed other designs, especially in the natural history and run-and-gun space. Those cameras still have a special place in my heart.

It’s been four years or so since we did that production, and I think it’s about to release in the next couple of weeks to headsets and then online. We’ll wait to see how it all comes out. But you learn, and sometimes those learnings are very, very painful.

Brett Stanley: [00:26:28] Yeah. And I think it’s probably quite a steep learning curve too, because you’re doing things that probably no one else could really advise you on. So you’ve got to learn these things yourself.

Casey Sapp: [00:26:38] There are no podcasts like the Underwater Podcast. There are no YouTube playlists, no speaking events. We just had to learn and fail. Failing fast and failing forward has been the mantra for the last half decade, and we’ve been fortunate to fall into bigger and bigger projects.

We can tell very, very quickly if a certain camera, or a certain type of production, is going to work and be successful. We want to put ourselves in position to help the people who are really doing the pioneering creative work. We want to go alongside them technically.

Brett Stanley: [00:27:22] Yeah, exactly. It reminds me a lot of a documentary called Chasing Coral that I saw; I think it was a Netflix thing that came out a couple of years ago. For the documentary, they wanted to document the bleaching of the coral, so they wanted to build time-lapse cameras that could sit on the reef and basically document the dying of that reef.

But they had all these troubles. They had these glass domes, but the domes would start to get covered in algae, so how do you keep them clean? They built these self-cleaning systems and all that sort of stuff. And I think there were a couple of times where they set these up, set the time-lapse going, came back a month later, and it hadn’t even worked. It was just a month wasted, because they couldn’t keep checking it all the time. Do you come up against those sorts of big problems, or are you basically there the whole time, operating the cameras?

Casey Sapp: [00:28:21] The algae problem is definitely a known problem with a lot of time-lapse work. And we see a really interesting breadth of clients. We have clients working in salmon farms. We have scientists doing deep-sea exploration. We just deployed a multi-camera system for 6,000 meters on the new OceanX boat.

And they’re doing live streaming and trying to help the pilots with their navigation.

Brett Stanley: [00:28:53] Is that like a manned mission, or is it an ROV?

Casey Sapp: [00:28:56] It’s both. The system was developed in such a way that it would shoot 360 and the submersible pilot could control it, and we could capture that uncompressed and post-process it later. Or we could do a compressed live stream from miles down, on an unmanned vehicle, to view, analyze, diagnose, and do all the different things that scientists like to do in the deep sea. So it’s used for both. We chose the Z CAM because it’s both a great live-streaming camera and a small, good-value solution that shoots 4K raw at 60 frames per second. There aren’t many like it; it’s a magical camera right now in the space, frankly, and we’re really happy with it.

So we have salmon farms. We have folks who are on fishing trawlers. We have folks who are sending these cameras into space. We have Hollywood, we have military, and Jeremy and I share these different clients and projects and work on them together. There are findings and learnings from each of them. We most certainly don’t have the algae problem in Hollywood, but we do have the problem of high data rates and a tremendous amount of footage that you have to process quickly. That’s increasingly useful for a new avenue called light field or volumetric capture: how do you get this data through the pipes so that you can actually do something with it almost in real time?

And doing that underwater is 10 times as hard.

Brett Stanley: [00:30:50] And so volumetric, I mean, you speak about the light field stuff, and that’s basically mapping an area based on the way the light comes back to the camera, so you can actually get a 3D representation of the scene. Is that right?

Casey Sapp: [00:31:03] There’s a small difference between light field and volumetric. You’re referring to light field, and the major difference is just data and how many cameras are capturing something. If you can get a mesh and a texture and the volume of an object, you are doing something that is volumetric, and you can see those models on Sketchfab.

You can use them for measurement. They can go into Unreal Engine or other game engines for different types of work. Light field is the next step up, and we’re not quite there yet. It’s about actually getting the most photoreal representation of an object, and it’s an insane amount of data.

Trying to do something that is bigger than a tennis ball has its own unique problems. There are a couple of guys around the world who are really pushing this medium forward; I can’t talk too much about who those people are. But light field above water is hard.

Light field below water is even harder. If you want to digitally preserve something, or if you want to see something in the most accurate representation, light field is the dream. That’s the goal. But if you can just get a mesh and a texture and at least see how that thing looks, and maybe add animation and movement to it later, that’s great too.

And a lot of people have used it for that.

Brett Stanley: [00:32:39] So let’s talk about your post-production, because you’re taking all this raw footage, which I guess has a pretty decent dynamic range, and then you need to turn it into a stitched file. That basically means that if you’re using a VR headset, you can turn around and it looks seamless, like there are no joins. How does that work for you? If you’ve got 360 and you’re underwater, looking through a plexiglass dome, what’s involved?

Casey Sapp: [00:33:06] So the typical methodology and post-production process for raw, high-resolution footage is: you capture it, you offload it with Thunderbolt 3 or some of the NVMe drives, and you try to get that to the office as quickly as you possibly can. You take those clips and, for the 180 and 360 workflow, you put them into Mistika VR, which is an optical flow stitching software.

And you pump out 4K, low-res files with a LUT on them to review. You figure out: did all the cameras work? What are the frames that you want to work with? What’s going to make it to the edit?

What do you need to throw away? You can review those in a headset, you can review those in spherical video players, or you can just view them as an awkward rectangular file. From there, you take that and put it into editing software, Adobe or DaVinci or something, and you start to make the edit and you start to make the story.

And you add the audio and some of the high-level previs CG work or graphics. Then, once you’ve chosen the frames that you want to work with and you’ve decided the exact selects, you hand those to a VFX house or someone who knows how to do the post-processing and the real fine stitching, which can be done in Mistika, or Nuke, or Fusion, which is owned by Blackmagic, and get into the heavy roto, paint, and stabilization that’s needed to make these things look pixel-perfect. Then you render out the file at whatever resolution you need and save the master.

So it’s very similar to a VFX workflow, where you’re just working with frames, and you only want to touch the frames that are important and throw everything else out, because every frame is multiplied by 12 cameras or 20 cameras or however many. And even trying to review those edits is an extra step and an extra amount of time. For example, if you need to review an edit of an 8K file, and you need to re-review that 10 times, how fast do you think you’re going to be able to get that done?

You need to allow the necessary time, a couple of weeks or months, to review, make fixes, review, make fixes. It’s like a heavy CG movie, you know, just,

Brett Stanley: [00:35:59] Yeah,

Casey Sapp: [00:35:59] I mean, it can’t be done quickly.

Brett Stanley: [00:36:03] No, it’s very detail-oriented and, I guess, tedious, but you’ve got to get in there with a fine-tooth comb and make sure it all works, because it’s so much data and it’s such a wide viewing angle, I guess.

Casey Sapp: [00:36:15] Yeah. People notice the pops, they notice the stitch lines, they notice the errors. Their eyes are drawn to that. You need to make a multitude of cameras look like one, and it’s not an easy process. And so the magic, you know, is really not about the cameras. You would think that if you build the most ideal array, everything’s going to work downstream. It’s just not true.

The magic is having very informed, experienced subject matter experts at each step who are going to identify problems and deal with them. Just as one story: when we were working on that 60-GoPro SeaWorld project, we tried to outsource some of the post-production work, where they do all the template creation and stitching.

The VFX house we were working with was throwing 50 or 60 people at a single shot, and they weren’t getting it to look right. We were quite disappointed. What we realized is they didn’t know how to align the cameras correctly at the very first step and do the template creation.

So they were having to do an extra amount of roto and comp and paint to make up for that. Whereas we found someone who did the template creation correctly and brought them in-house, and we were able to turn these scenes around in a couple of days with one person. So it really matters who the people are that you bring in and who the team is.

Otherwise you won’t finish.

Brett Stanley: [00:38:00] I mean, because it is like putting together a jigsaw puzzle, right? If you don’t know what that jigsaw puzzle is meant to look like, how do you even know how to start?

Casey Sapp: [00:38:07] A hundred percent accurate. You nailed it. That’s exactly right.

Brett Stanley: [00:38:10] That’s awesome, dude. And so in terms of operating these cameras, if you are the underwater operator for one of these projects, what’s the range of things you have to do? Is it just like being a normal camera operator, basically running through the scene, or do you have to keep certain things in mind while you’re down there?

Casey Sapp: [00:38:30] So the first thing, as you’ve probably heard on the Underwater Podcast, is the diving has to be just spot on. The experience has to be a known quantity, and I typically am not going to work with guys who don’t already have rebreather experience, or at least a thousand or a couple thousand dives under their belt.

And then it’s important that they have camera-op experience with REDs or, you know, high-end cinema cameras, so that they actually understand how to get the most out of these cameras. That in and of itself is kind of hard to find, actually. And then, yeah, having a team member who you enjoy and can spend time with, because you’re going to bunk with them and travel all around the world.

And a lot of these guys can be found in the blue-chip natural history productions, and, you know, the BBC’s anything with “Planet” in it. There’s also a scholarship called the Rolex Scholarship, where a lot of these guys have gotten that diving experience and camera experience out of the way.

It doesn’t limit us; I’m very open to working with anyone. I wish there were enough projects where I could pull in a lot more folks and build a bigger, tighter network. But in terms of these projects, there’s only a handful every year, you know, 10 or 20 at most.

And so you’ve got to go with guys who you’re familiar with, who you’ve built a lot of camaraderie with, and who you don’t have to worry about underwater, sometimes even being alone. Because, like we talked about earlier, the more people that are in frame, the more you’re taking yourself out of that natural history experience. You can’t have five safety divers in the water and not have your eyes drawn to that.

So there are a number of different considerations around how many people you want to have in the water. You need to have small teams. And you can’t recreate; there are no green screens in 360. You need to go to the lakes and the oceans. If you’re going to film someone drowning, you actually have to find someone who can fake their drowning pretty well and handle that.

And we’ve done this before. You need to drive out a couple of miles, find blue ocean, and put weights on them, and they’ve got to be a really good swimmer. There are no stunt divers, just, you know, the safety divers around. Everything has to look real. Otherwise you’re going to be spending a ton of money trying to paint these people out.

Brett Stanley: [00:41:07] Well, that’s the thing, isn’t it? If you’re doing a 180, or just a regular one-directional camera, you can hide everything behind the camera. But if you’re doing 360, there’s no way to hide.

Casey Sapp: [00:41:21] There’s no hiding.

Brett Stanley: [00:41:22] Even the operator, I guess. That’s the one thing you’d really want to paint out.

Casey Sapp: [00:41:25] That’s right. And some people don’t have the money to; if they did have the money, they would do it. There are different tactics to make that easier in post, but you can’t hide anything. Everything has to look real. And so a lot of these projects are natural history based: manta rays, large animals, what would be considered dynamic megafauna. There are some cinematic, framed experiences with talent, but they’re difficult. They’re really hard. And I only see maybe one or two a year, especially for underwater work.

Brett Stanley: [00:42:08] So you’re talking about narrative, feature kind of work.

Casey Sapp: [00:42:12] That’s right. Yeah. Where maybe the talent is pushed off a boat and is drowning, or dancers, or some type of underwater ballet that’s framed. And, you know, a lot of folks, when they look in these headsets, don’t want to see a pool wall. They want to see open ocean. They want to see features and ambient environments, and you can’t recreate that.

You can’t green-screen that. So everything has to be real to really capture it.

Brett Stanley: [00:42:44] Especially if you’re so immersed in the headset and everything. It’s touching your eyeballs; there’s no distance to hide stuff.

Casey Sapp: [00:42:52] That’s right. Yeah. So run-and-gun is very typical in these types of shoots underwater, just capturing the natural wonders of the world. And there’s a certain process and a certain type of camera that you have to build to be ready for that. You can’t have all of the waveforms and preview displays and, you know, tethers that the guys in Hollywood have become accustomed to and have perfected in a lot of ways, which I have a lot of respect for.

I wish we could do that in this space, but at the very most you can get comms and a mask; you can’t really bring the director down to the scene. They’ve just got to review this stuff later.

Brett Stanley: [00:43:41] So what have you got coming up? Is there anything you can allude to? I know you’ve probably got some highly secret stuff going on, but is there anything interesting coming up?

Casey Sapp: [00:43:50] Yeah, we’re building cameras. We’ve got large LED projects over the next couple of years that are releasing all over the world.

And we’ve got a few military projects as well with ROVs, so we’re doing a lot more ROV work. I would look out for Into the Now. I don’t know when it’s going to come out, I don’t know how it’s going to release, and I don’t know what Michael Muller is going to do, but he’s done some really groundbreaking work. It was one of the most ambitious projects of the last decade in the 360 world, so just keep your eyes out for that whenever it hits.

Brett Stanley: [00:44:30] Yeah, I will. I think there’s a trailer on your website; I’ll link to that or embed it in the show notes for people.

Casey Sapp: [00:44:37] Cool,

Brett Stanley: [00:44:37] Thanks, Casey. It’s been awesome.

Casey Sapp: [00:44:39] Brett, thanks for having me. It’s good to chat with you, and it’s good to be a part of this podcast alongside some of the other giants in the industry whose episodes I’ve enjoyed listening to. I really appreciate the time, and I look forward to speaking with you again.

Brett Stanley: [00:44:56] You take care, Casey. Thanks so much, man.